According to Brian Kernighan, “Everyone knows that debugging is twice as hard as writing a program in the first place. So if you’re as clever as you can be when you write it, how will you ever debug it?” With that in mind I’m re-exposing myself to the spaghetti that is the Aihato WordPress code base.

My last comment on that project dates from December 2010, although there have been some minor code updates in the meantime (not all of them by me), mostly having to do with the look and feel.

During my Christmas break, I visited the Ytec office to meet with their sysadmin. He made the old Subversion repository available to me again (although, internally, they’ve gone over to Bazaar) and created a new development version of the site (the old one hadn’t been carried along when the production version moved to a new server). After some changes to my Makefile, I’m now able to do the whole development/production dance again, and I’ve just tested this by successfully upgrading to WordPress 3.5.1 (all the way from 3.0.5). Yes, this is quite a simple affair, if your setup is 1337:

Upgrading WordPress

I really like it better when the WordPress factory stuff lives in its own directory. I like this better even than using vendor branches to keep track of what’s mine and what’s WordPress’s. Let me show you why (and yes, I could have turned this into a proper script, if I wanted to):

# Get into my working dir

cd www.aihato.nl

# Add the new WP version to the externals

svn propget svn:externals . > /tmp/wp-ext

echo 'wp-3.5.1 http://core.svn.wordpress.org/tags/3.5.1/' >> /tmp/wp-ext

svn propset svn:externals --file /tmp/wp-ext .

svn update # fetches the new external into wp-3.5.1

rm wp # The symbolic link pointing to the old wp-3.0.5

ln -s wp-3.5.1 wp

make update-development # to test if the upgrade will fuck up anything major

make deploy-production # because it works

svn ci -m "Upgraded WP from wp-3.0.5 to wp-3.5.1."

Rewrite problems after upgrade

Of course, the upgrade had to cause some trouble. This particular trouble was caused by some code in my theme’s functions.php that really belonged in a plugin. I basically made a rogue call to $wp_rewrite->flush_rules(); which caused the rewrite rules to either lack the ones concerning my custom category base (‘/categorie’) or those concerning my custom rewrite endpoints.

To fix this I created an exceedingly simple plugin with just the following code:

add_action('init', 'gallery_endpoint_initialize');

register_activation_hook( __FILE__, function() {

global $wp_rewrite;

add_rewrite_endpoint('gallery', EP_PERMALINK | EP_PAGES);

$wp_rewrite->flush_rules();

});

function gallery_endpoint_initialize() {

add_rewrite_endpoint('gallery', EP_PERMALINK | EP_PAGES);

add_filter('query_vars', 'aihato_queryvars');

}

function aihato_queryvars($qvars) {

$qvars[] = 'gallery';

return $qvars;

}

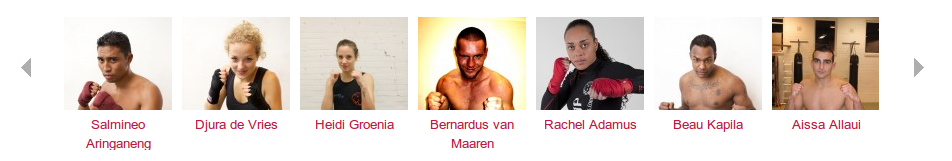

Thoughts on custom post types for fighter profiles

I commented on custom post types before, in the section on fighter profiles in the extensive summary of the whole development process, and in a comment in my development notes.

The custom post type for fighter profiles that I started implementing as a plugin is still quite high on my feature wishlist. The complex dance with page parent, page template and custom fields has proven to be quite difficult for the guys.

At the time, a real showstopper “bug” was that the featured image box wasn’t displayed with the post type. Now, it took me approximately five minutes to find out that I should have simply added the custom post type to the add_theme_support() call in my theme’s functions.php:

add_theme_support('post-thumbnails', array('post', 'page', 'aihato_fighter'));

Another problem “was that clicking the Insert image button replaced the current page with the upload dialog instead of loading it in a modal dialog through AJAX.” This seems to be resolved in the new WordPress, so postponing the custom post type stuff until it had stabilized a bit in the future has proven to be an excellent strategy. Apparently, that future has arrived.

I do wonder: if I implement the custom post type for fighter profiles now, how do I convert my existing page content to the custom post type? Could I just change the post_type field in the database after adding the necessary function calls to register this new type?
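Changing the post_type field does look like a workable route, and WordPress ships a helper for it: set_post_type() updates that column and clears the post cache. The following is only a sketch under assumptions: the function name aihato_convert_fighter_pages() and the $parent_id parameter (the ID of the page the fighter profiles hang under) are hypothetical, and it has to run inside WordPress after the new post type has been registered.

```php
<?php
/**
 * Sketch: turn all child pages of one parent page into 'aihato_fighter' posts.
 *
 * $parent_id is a hypothetical parameter: the ID of the page that the
 * existing fighter profile pages are children of.
 * Returns the number of pages that were converted.
 */
function aihato_convert_fighter_pages($parent_id) {
    // Fetch every child page, regardless of publication status.
    $pages = get_posts(array(
        'post_type'   => 'page',
        'post_parent' => $parent_id,
        'post_status' => 'any',
        'numberposts' => -1,
    ));

    $converted = 0;
    foreach ($pages as $page) {
        // set_post_type() rewrites the post_type column for this post
        // and returns false when the update fails.
        if (set_post_type($page->ID, 'aihato_fighter')) {
            $converted++;
        }
    }

    return $converted;
}
```

Custom fields stay attached to the posts, since they are keyed on the post ID. The page template setting and the parent relation are left as they were, so those would still need a separate look, and the rewrite rules need flushing afterwards.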

Also, I’m thinking of turning some of the custom fields not just into fancy boxes in the post edit GUI, but into proper taxonomies. This might complicate migration even further, so it’s probably the wisest course to do this thing in phases:

- Implement the custom post types with integrated support in the GUI for the custom fields that will remain custom fields under the hood.

- Maybe migrate some custom fields (such as fighter discipline) to proper taxonomies.

- Modify the theme to use the new custom post type instead of the old pages.

- Integrate the fight record stuff with the aihato-events stuff.

The process of moving fighter profiles to their own custom post type deserves a dedicated post, but this’ll have to wait until I actually continue this process.

Thoughts on improving the photo gallery

There are some annoying bugs in the photo gallery. I want to start out by upgrading all the relevant JavaScript libraries that I used, to see how far that gets me.

Performance-wise, there’s also some low-hanging fruit. Photos are often uploaded in high resolution. Instead of forbidding this, I want it to be performance-neutral: rather than using the full version of the image in many places (such as the gallery), I want to use a huge-ass thumbnail that serves as the “enlarged” version when you click pictures in the gallery and such.

Thoughts on improving the video gallery

With regard to the video gallery, it has turned out that YouTube support alone is not sufficient. I will have to find a way to base the gallery on all oEmbed links. I have, since initial deployment, already added embed (not oEmbed) support for fightstartv.com:

wp_embed_register_handler('fightstartv', '|http://www\.fightstartv\.com/videos/([a-zA-Z0-9-_]+)/|', 'embed_handler_fightstartv');

function embed_handler_fightstartv($matches, $attr, $url, $rawattr) {

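$fstv_html = file_get_contents($url);

$fstv_html_matches = array();

// Fall back to a plain link if the page can't be fetched or parsed

if ($fstv_html === false || !preg_match('|"(/upload/[^"/]+\.mp4)".*"image": "([^"]+)"|', $fstv_html, $fstv_html_matches)) {

return '<a href="' . esc_url($url) . '">' . esc_html($url) . '</a>';

}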

$fstv_mp4_url = 'http://www.fightstartv.com' . $fstv_html_matches[1];

$fstv_image_url = $fstv_html_matches[2];

$embed = '<embed src="http://www.fightstartv.com/wp-content/plugins/vipers-video-quicktags/resources/jw-flv-player/player.swf" height="300" width="600" bgcolor="0x000000" allowscriptaccess="always" allowfullscreen="true" flashvars="file='.urlencode($fstv_mp4_url).'&skin=http%3A%2F%2Fwww.fightstartv.com%2Fwp-content%2Fplugins%2Fvipers-video-quicktags%2Fresources%2Fjw-flv-player%2Fskins%2Fmodieus.swf&volume=100&bufferlength=3&backcolor=0x000000&duration=-1&frontcolor=0xffffff&lightcolor=0xffffff&screencolor=0x000000&autostart=false&image='.urlencode($fstv_image_url).'&ltas.cc=zerfildkisjwvgk&ltas.height=268&ltas.width=700&ltas.y=0&ltas.visible=true&ltas.x=0&adtvideo.height=268&adtvideo.y=0&adtvideo.position=over&adtvideo.width=700&adtvideo.visible=true&adtvideo.x=0&adtvideo.config=http%3A%2F%2Fwww.fightstartv.com%2Fmy_config.xml& adttext.height=268& adttext.width=700& adttext.y=0& adttext.visible=true& adttext.x=0&adttext.y=0&adttext.x=0&adttext.height=268&adttext.visible=true&adttext.config=http%3A%2F%2Fwww.fightstartv.com%2Fadttext.php&adttext.width=600&plugins=hd-2,viral-1,ltas,adtvideo, http://plugins.longtailvideo.com/5/flow/flow-2.swf, adttext,adttext"/>';

return apply_filters('embed_fightstartv', $embed, $matches, $attr, $url, $rawattr);

}

But these videos show up as just links in the film gallery instead of sporting the fancy FancyBox popup. Luckily, thumbnails do work, but more due to a quirk than by actual design. 🙁 This is going to take some time to clean up, because most of the code concerned is terribly YouTube-centric by design.

Latest video on the homepage

The homepage features a block with the latest video. From my previous notes on this subject: “[… T]o show the latest video on the homepage, I just perform a search for posts which contain a YouTube URL. Then I parse the content a bit, and include the YouTube ID in my own low-res embed code. (The latest video area on the homepage is smaller than the default embed created by WordPress.)”

As of late, no video had been showing there, making me suspect—No I’m not telling you that. It’s actually too embarrassing!

Block with(out) latest video on the home page

This had everything to do with a missing hyphen character in the video id character class in the YouTube URL matching regexp within the scraping function:

preg_match('|http://www.youtube.com/watch\?v=([-a-zA-Z0-9]+)|', // better

preg_match('|http://www.youtube.com/watch\?v=([a-zA-Z0-9]+)|', // worse

With the hyphen added:

Block with latest video on the home page
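To make the effect of the character class concrete, here is a minimal sketch of the matching step on its own. The function name aihato_find_youtube_id() is made up for illustration and is not the actual scraping function. The pattern also allows an underscore, which can occur in YouTube IDs as well, and escapes the dots in the host name. Without the hyphen in the class, the match simply stops at the first hyphen, so an ID like ab-cd12 would come back truncated to ab.

```php
<?php
/**
 * Return the YouTube video ID found in $content, or null if there is none.
 *
 * The character class includes '-' (the fix described above) and '_'
 * (an addition in this sketch, since IDs may contain it too).
 */
function aihato_find_youtube_id($content) {
    $pattern = '|https?://www\.youtube\.com/watch\?v=([-_a-zA-Z0-9]+)|';

    if (preg_match($pattern, $content, $matches)) {
        return $matches[1]; // the captured video ID
    }

    return null; // no YouTube watch URL in this content
}
```

Calling aihato_find_youtube_id($post->post_content) on a post then yields either the complete ID or null, which makes the "no video found" case easy to handle in the template.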

Making post thumbnails crop from the top

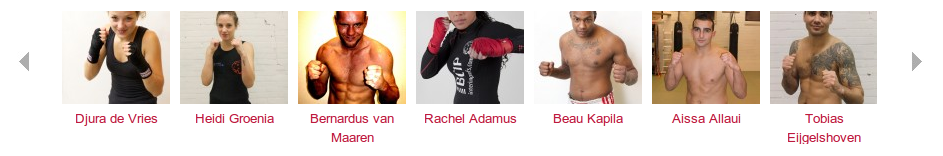

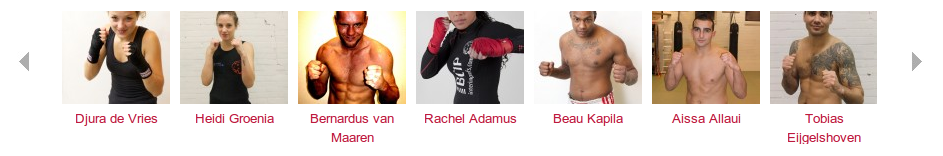

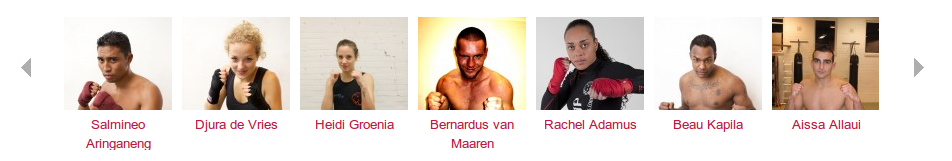

WordPress hard crops uploaded images to match the post thumbnail size (set with set_post_thumbnail_size()) and the other alternative image sizes (added through add_image_size()). It does this from the center. This gives horrible results in the common case that a person does not happen to be decapitated and holding his or her head in his or her hands:

In the image editor, there is the ability to edit the thumbnail separately from the actual image, allowing you to manually crop the thumbnail, but this is cumbersome and only allows you to choose between applying the changes to all image sizes, just the thumbnail or to everything but the thumbnail, and I happen to not be using the thumbnail for the fighter profiles.

Basically, what I want is for WordPress to hard crop from the top instead of from the middle of the photo. And this would be an ok default for all photos. I truly don’t get why this isn’t configurable by default.

I found someone suggesting to change image_resize_dimensions() in wp-includes/media.php, because, at the time that he encountered the problem, there were apparently no plugin hooks available. There is a filter now, since WP version 3.4, called “image_resize_dimensions”. A quick Googling for this function/filter name reveals that, apparently, the Codex now even has a ready-to-go recipe to crop thumbnails by keeping the top of the image, instead of the default center.

With this code up and running in a plugin called ‘top-crop’, I consider this problem fixed. No more looking at beheaded fighters and no need for my users to muck around with the image editor (which wouldn’t work anyway because I use too many different image sizes).
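For reference, a filter of this kind can be sketched as follows. This is not the Codex recipe verbatim, and the function name top_crop_dimensions() is made up for illustration. It assumes both target dimensions are given and otherwise hands control back to WordPress by returning null. The calculation mirrors the default hard crop, except that the vertical offset is fixed at 0.

```php
<?php
/**
 * Hard-crop from the top edge instead of the center.
 *
 * Meant for the 'image_resize_dimensions' filter (WP 3.4+). Returning null
 * tells WordPress to fall back to its own calculation; returning false
 * means "do not resize at all".
 */
function top_crop_dimensions($default, $orig_w, $orig_h, $dest_w, $dest_h, $crop) {
    // Only handle hard crops with a complete set of positive dimensions.
    if (!$crop || $orig_w <= 0 || $orig_h <= 0 || $dest_w <= 0 || $dest_h <= 0) {
        return null;
    }

    // Never upscale: the result is at most as large as the original.
    $new_w = min($dest_w, $orig_w);
    $new_h = min($dest_h, $orig_h);

    // Nothing to do if the result would be as large as the original.
    if ($new_w >= $orig_w && $new_h >= $orig_h) {
        return false;
    }

    // Size of the region to cut out of the original image.
    $size_ratio = max($new_w / $orig_w, $new_h / $orig_h);
    $crop_w = (int) round($new_w / $size_ratio);
    $crop_h = (int) round($new_h / $size_ratio);

    $s_x = (int) floor(($orig_w - $crop_w) / 2); // still centered horizontally
    $s_y = 0;                                    // but anchored to the top edge

    // dst_x, dst_y, src_x, src_y, dst_w, dst_h, src_w, src_h
    return array(0, 0, $s_x, $s_y, $new_w, $new_h, $crop_w, $crop_h);
}

// Register the filter only when running inside WordPress.
if (function_exists('add_filter')) {
    add_filter('image_resize_dimensions', 'top_crop_dimensions', 10, 6);
}
```

Existing thumbnails are not touched by the filter, so they have to be regenerated before the change becomes visible.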

My trusty old regenerate-thumbnails and the new AJAX Thumbnail Rebuild are now doing their job, after I spent hours chasing ghosts because of a silent file permission problem on the development web server.

Aihato fighter profiles with heads

Relief and anticipation

In retrospect, digging back into the spaghetti isn’t as bad as it could have been, partly because I spent an extraordinary amount of time on documenting all the bumps in the road before. After reacquainting myself, I’m actually less intimidated by the prospect of upping the awesomeness of www.aihato.nl than I was when I actually delivered the product, which goes entirely against the saying at the beginning of this article. Either I’m smarter now than I was a couple of years ago, or I exercised sufficient restraint in introducing complexities (although idiosyncrasies, mostly well-documented, abound).
